About me

Weikang (Zachary) is a third-year Ph.D. candidate (2023-) at SMULL Group of HITsz CS, advised by Prof. Zheng Zhang. He received his B.S. degree in Information and Computational Science from Harbin Institute of Technology in 2023. His research interests lie in efficient training and inference algorithms for large-scale foundation models, particularly linear attention.

News

- 2025.06: 📮📮 Our new work “NaLaFormer: Norm-Aware Linear Attention for Transformer Models” has been uploaded to arXiv.

- 2025.01: 🎉🎉 “PolaFormer: Polarity-aware Linear Attention for Vision Transformers” is accepted to ICLR’25.

Publications

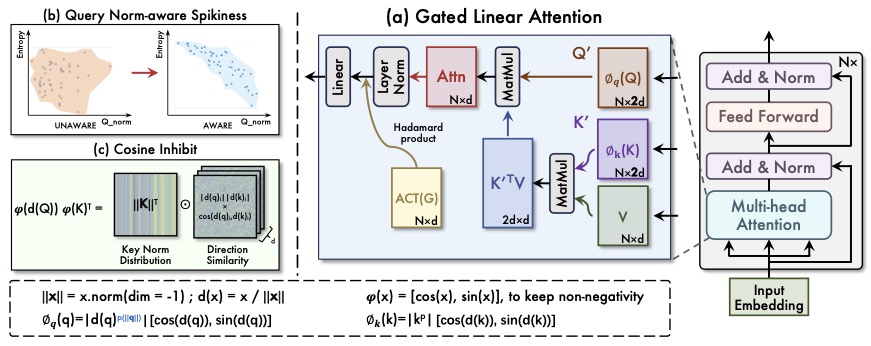

NaLaFormer: Norm-Aware Linear Attention for Transformer Models

Weikang Meng, Yadan Luo, Liangyu Huo, Yaowei Wang, Xin Li, Zheng Zhang

This work introduces NaLaFormer, a linear attention mechanism for Transformer-based models. Using Positive Sequence Entropy (PSE), it theoretically analyzes how the query norm controls the dynamic entropy reduction of softmax attention, and proposes a norm-aware kernel function on that basis. In addition, NaLaFormer uses Ptolemy's theorem to preserve the non-negativity constraint on attention weights.

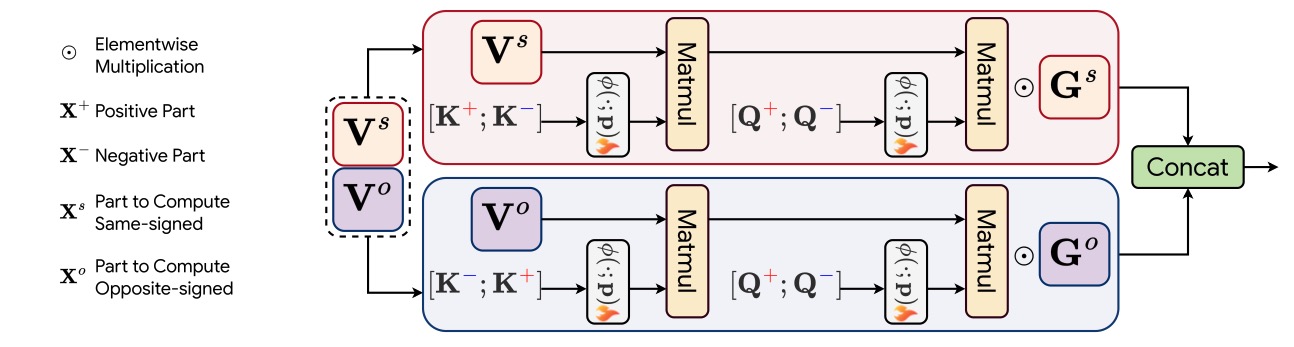

PolaFormer: Polarity-aware Linear Attention for Vision Transformers

Weikang Meng, Yadan Luo, Xin Li, Dongmei Jiang, Zheng Zhang

This work presents PolaFormer, a novel attention mechanism with linear complexity. PolaFormer computes similarity in a polarity-aware form to avoid neglecting negative values. We also theoretically derive a family of element-wise functions that make the attention weights spikier, and employ a learnable power function for simplicity and rescaling.

Honors and Awards

- 2018.09, National High School Mathematics League, Provincial first prize

Education

- 2023.08 - now, Ph.D. student, Harbin Institute of Technology, Shenzhen, School of Computer Science and Technology, Computer Science and Technology

- 2019.08 - 2023.07, Undergraduate student, Harbin Institute of Technology, School of Mathematics, Information and Computational Science

- 2016.08 - 2019.07, Harbin No. 3 High School